Making daily backups when your data has stayed safe for years may seem redundant and needless. That is why people often realize how foolish it was not to have backups only after their data is lost (it happened to me too, quite a long time ago). However, backing up does not have to be a dull process: it can serve other purposes too…

In this article, I’m going to share the solution that I personally use to back up this site. When looking for one, I wanted it to meet the following requirements:

- I did not want to back up the whole server. It’s quite easy to set up a new one (on DigitalOcean, in my case). The real problem is restoring the configuration, code and user data (e.g., databases), so these are the things that should be backed up.

- Backups should be made at least once a day (DigitalOcean offers backups only once a week). It’s much easier to recall (and redo) what you’ve done during a day than to restore what was done during, e.g., a week.

- The backup data should be stored on a separate server, preferably in a separate network, and ideally on a computer at my home (my site is not hosted at home, of course).

- The solution should back up only those things that have changed since the last backup. This means less network load and a faster process. It also keeps data from being transferred over the network unnecessarily (which can be considered a security risk).

- Also, I wanted this backup solution to be open source.

This is how I decided to use ownCloud for my backups. The reasons include:

- ownCloud has a command-line client that can be installed on a server that does not run X (as in my case).

- ownCloud is available in native Debian repositories.

- Files from the server are backed up to an external cloud and can also be synced to my desktop computer (if I install the ownCloud client there, which I did).

- You can run your own ownCloud server or use 1 GB on ownDrive.com for free.

- It meets all my requirements.

So, I installed the ownCloud console client using the following command:

```sh
# apt-get install owncloud-client-cmd
```

The console client still depends on many libraries from the X environment, so the command above will install them as well. But don’t worry: it won’t install X itself, only some of its libraries.

It should also be noted that ownCloud syncs files in both directions. That is, it can update your file on the server if it believes that the version of the file in the cloud is newer. This was one of the problems I faced. In my case, it was caused by bugs in the client, but, in any case, server system files should never be updated from the cloud!

So, to keep the ownCloud client from updating the server files, I run it as a dedicated user that has no write access to them (the id command shows the user’s details):

```sh
$ id sync
uid=4(sync) gid=34(backup) groups=34(backup)
```

Of course, I made sure that all the files I want to back up are readable by this user. I also checked that none of these files are world-writable. Otherwise, the ownCloud client would still be able to overwrite them.
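The world-writable check can be done with `find`. Below is a minimal sketch. The function name `find_world_writable` is hypothetical and not part of the original setup. It prints every entry under a directory whose "others" write bit is set, because such entries are exactly the ones the sync user could still overwrite.

```sh
#!/bin/sh
# Hypothetical helper: list entries under a directory that are
# world-writable, i.e. that the unprivileged sync user could overwrite.
# Usage: find_world_writable <directory>
find_world_writable() {
    # -perm -0002 matches entries whose "others" write bit is set;
    # symbolic links are skipped because their own mode is always 777
    find "$1" ! -type l -perm -0002 -print
}
```

For example, `find_world_writable /etc/apache2` should print nothing. The readability half of the check can be done in a similar way by running GNU find's `! -readable` test as the sync user.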

Another problem is that the ownCloud client needs to store its state files in the directory it synchronizes, so it must be able to create and update those state files there. Therefore, I changed the group of all such directories to backup (luckily, it was safe to do so) and made them writable by this group:

```sh
# chgrp backup <directory>
# chmod g+w <directory>
```

However, keep in mind that a user who can create files in a directory can also rename and delete any file in it, even one it cannot modify (yes, I ran into this problem too). The sticky bit solves this. In a sticky directory, only a file’s owner (or the directory’s owner, or root) can rename or delete that file:

```sh
# chmod +t <directory>
```
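The three commands above can be combined into one step per directory. Here is a minimal sketch that assumes the group name is passed in (the article uses `backup`). The function name `prepare_sync_dir` is hypothetical.

```sh
#!/bin/sh
# Hypothetical helper: prepare one backed-up directory for the sync user.
# Usage: prepare_sync_dir <directory> <group>
prepare_sync_dir() {
    dir=$1
    group=$2
    # let members of the group create ownCloud's state files here
    chgrp "$group" "$dir" || return 1
    chmod g+w "$dir" || return 1
    # sticky bit: only a file's owner (or the directory owner, or root)
    # may rename or delete it
    chmod +t "$dir"
}
```

Suppose a directory starts with mode 755. Running `prepare_sync_dir <directory> backup` should then leave it with mode 1775, which `stat -c %a <directory>` displays.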

Now it’s safe to run the following command to back up the directory $local_dir:

```sh
$ su - sync -s /bin/sh -c "owncloudcmd -u $user -p $password $local_dir $remote_location"
```

Here, $remote_location is the URL of a directory in the cloud, which should already exist and initially be empty (see also the script below).
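One way to create such remote directories is WebDAV's `MKCOL` method against the ownCloud endpoint (`.../remote.php/webdav`). `MKCOL` creates only one level at a time and fails if the parent is missing, so each level of the path has to be created in turn. The sketch below rests on assumptions not in the original setup: `curl` is installed, the base URL uses `https://` rather than owncloudcmd's `ownclouds://` scheme, and the helper name `make_remote_dirs` is hypothetical.

```sh
#!/bin/sh
# Hypothetical helper (assumes curl; not part of the article's setup):
# create every level of a remote path on an ownCloud WebDAV endpoint.
# Usage: make_remote_dirs <webdav-base-url> <user> <password> <path>
make_remote_dirs() {
    base=$1
    user=$2
    password=$3
    rest=${4#/}
    current=
    while [ -n "$rest" ]; do
        part=${rest%%/*}                  # first remaining component
        case $rest in
            */*) rest=${rest#*/} ;;       # drop it and the following "/"
            *) rest= ;;                   # it was the last component
        esac
        [ -n "$part" ] || continue        # tolerate "//" in the path
        current="$current/$part"
        # an existing collection answers "405 Method Not Allowed",
        # which is harmless here, so the response is not checked
        curl -s -o /dev/null -u "$user:$password" -X MKCOL "$base$current"
    done
}
```

For example, `make_remote_dirs https://<owncloud.host.com>/remote.php/webdav <username> <password> Configuration/Apache` issues `MKCOL` for `/Configuration` and then for `/Configuration/Apache`.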

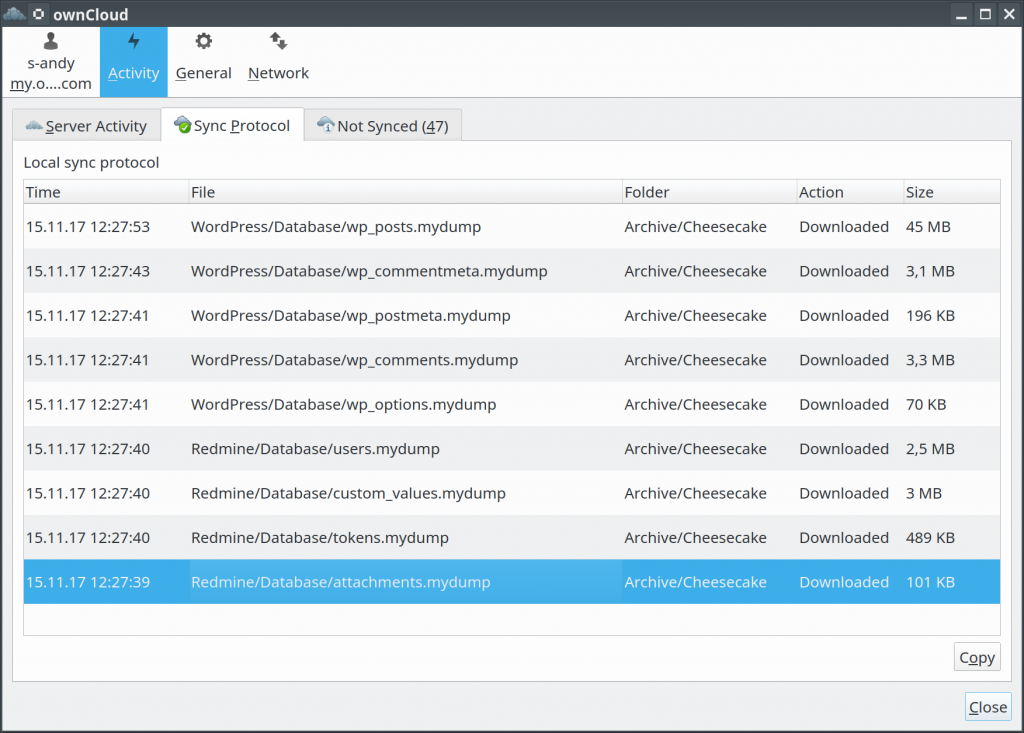

In addition to the cloud storage, I prefer to keep the data backed up from the server on my local computer as well. Therefore, I also installed the ownCloud client on my desktop and pointed it to the cloud where the data is stored. As a result, I can now see what data has changed on the server since the last backup.

This brings a couple of extra benefits to this ownCloud-based backup solution:

- You can see whether and when the backups are made. The backup process can fail too, and with this solution you will know when that happens.

- It’s another way to be notified about changes on the server. For example, if a user submits an issue to Redmine, you will know, because the dump of its `issues` table will be updated (I back up a dump of each table separately).

- If an intruder modifies or adds a system file (under a directory that you back up, of course), you will see that it was updated.
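On the desktop side, both kinds of change can be spotted by listing recently modified files in the local sync folder. Here is a minimal sketch. It assumes the files' modification times reflect when they last changed, and it treats the folder path as a parameter, since the article does not name it. The function name `recently_changed` is hypothetical.

```sh
#!/bin/sh
# Hypothetical helper: list files in the local ownCloud folder that
# changed within the last N days (default: 1).
# Usage: recently_changed <sync-folder> [days]
recently_changed() {
    # -mtime -N matches files modified less than N*24 hours ago;
    # hidden files (e.g. the client's own state files) are skipped
    find "$1" -type f ! -name '.*' -mtime -"${2:-1}" -print
}
```

Run daily, an unexpected entry (or an empty list when you expected changes) is a hint to look closer.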

In this way, this ownCloud-based solution serves more than just backups.

Finally, here is the script that performs the backups (I put it into /etc/cron.daily/, which makes it run once a day):

```sh
#!/bin/sh

svn=/usr/bin/svn
owncloud=/usr/bin/owncloudcmd
svnadmin=/usr/bin/svnadmin
mysqldump=/usr/bin/mysqldump
safe_owncloud=/usr/local/bin/safe_owncloud

BACKUPS_DIR=/root/Backups

OWNDRIVE_URL=<owncloud.host.com>/remote.php/webdav
OWNDRIVE_USERNAME=<username>
OWNDRIVE_PASSWORD=<password>
OWNDRIVE_ARGS="--silent --non-interactive --user $OWNDRIVE_USERNAME --password $OWNDRIVE_PASSWORD"
OWNDRIVE=ownclouds://$OWNDRIVE_URL

REDMINE_PASSWORD=<password>
WORDPRESS_PASSWORD=<password>

MYSQLDUMP_ARGS="--compact --skip-tz-utc --add-drop-table"

# Backup configuration
$safe_owncloud $OWNDRIVE_ARGS /etc/apache2 $OWNDRIVE/Configuration/Apache 2> /dev/null
$safe_owncloud $OWNDRIVE_ARGS /etc/postfix $OWNDRIVE/Configuration/Postfix 2> /dev/null
$safe_owncloud $OWNDRIVE_ARGS /etc/redmine $OWNDRIVE/Configuration/Redmine 2> /dev/null
$safe_owncloud $OWNDRIVE_ARGS /etc/ssh $OWNDRIVE/Configuration/OpenSSH 2> /dev/null
$safe_owncloud $OWNDRIVE_ARGS /etc/cron.daily $OWNDRIVE/Configuration/Cron 2> /dev/null
$safe_owncloud $OWNDRIVE_ARGS /etc/wordpress $OWNDRIVE/Configuration/WordPress 2> /dev/null

# Backup system tools
$safe_owncloud $OWNDRIVE_ARGS /usr/local/bin $OWNDRIVE/Tools 2> /dev/null

# Backup database
for TABLE in `mysql -u redmine -p$REDMINE_PASSWORD redmine -E -e 'SHOW TABLES' | grep '^Tables_in_redmine:' | cut -b20-`; do
    $mysqldump -u redmine -p$REDMINE_PASSWORD $MYSQLDUMP_ARGS redmine $TABLE > $BACKUPS_DIR/Redmine/$TABLE.mydump2
    if cmp -s $BACKUPS_DIR/Redmine/$TABLE.mydump $BACKUPS_DIR/Redmine/$TABLE.mydump2; then
        rm $BACKUPS_DIR/Redmine/$TABLE.mydump2
    else
        mv $BACKUPS_DIR/Redmine/$TABLE.mydump2 $BACKUPS_DIR/Redmine/$TABLE.mydump
    fi
done
$owncloud $OWNDRIVE_ARGS $BACKUPS_DIR/Redmine $OWNDRIVE/Redmine/Database 2> /dev/null

for TABLE in `mysql -u wordpress -p$WORDPRESS_PASSWORD wordpress -E -e 'SHOW TABLES' | grep '^Tables_in_wordpress:' | cut -b22-`; do
    $mysqldump -u wordpress -p$WORDPRESS_PASSWORD $MYSQLDUMP_ARGS wordpress $TABLE > $BACKUPS_DIR/WordPress/$TABLE.mydump2
    if cmp -s $BACKUPS_DIR/WordPress/$TABLE.mydump $BACKUPS_DIR/WordPress/$TABLE.mydump2; then
        rm $BACKUPS_DIR/WordPress/$TABLE.mydump2
    else
        mv $BACKUPS_DIR/WordPress/$TABLE.mydump2 $BACKUPS_DIR/WordPress/$TABLE.mydump
    fi
done
$owncloud $OWNDRIVE_ARGS $BACKUPS_DIR/WordPress $OWNDRIVE/WordPress/Database 2> /dev/null

# Backup code
$safe_owncloud $OWNDRIVE_ARGS /usr/share/redmine $OWNDRIVE/Redmine/Code 2> /dev/null
$safe_owncloud $OWNDRIVE_ARGS /usr/share/wordpress $OWNDRIVE/WordPress/Code 2> /dev/null

# Backup subversion
# to restore: svnadmin create <repo>; svnadmin load <repo> < <repo>.svndump
for SVN_REPOSITORY in /var/lib/svn/*; do
    REPOSITORY_DATE=`$svn info file://$SVN_REPOSITORY | grep '^Last Changed Date' | cut -d' ' -f4-5 | tr -cd '[:digit:]'`
    BACKUP_DATE=`stat -c %y $BACKUPS_DIR/Subversion/${SVN_REPOSITORY##*/}.svndump 2> /dev/null | cut -d. -f1 | tr -cd '[:digit:]'`
    if [ "x$BACKUP_DATE" = "x" ] || [ $REPOSITORY_DATE -gt $BACKUP_DATE ]; then
        $svnadmin dump $SVN_REPOSITORY > $BACKUPS_DIR/Subversion/${SVN_REPOSITORY##*/}.svndump 2> /dev/null
    fi
done
$owncloud $OWNDRIVE_ARGS $BACKUPS_DIR/Subversion $OWNDRIVE/Subversion 2> /dev/null

# Backup attachments/files
$safe_owncloud $OWNDRIVE_ARGS /var/lib/redmine $OWNDRIVE/Redmine/Attachments 2> /dev/null
$safe_owncloud $OWNDRIVE_ARGS /var/lib/wordpress/wp-content $OWNDRIVE/WordPress/Content 2> /dev/null
```

Besides synchronizing directories, the script contains code to:

- Dump each table of the Redmine and WordPress databases, but keep the new dump only if it contains changes.

- Dump each Subversion repository, but only if it has new commits.
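The "keep the dump only if it changed" step from the script can be factored into a reusable helper. The name `update_if_changed` is hypothetical, and the sketch simply mirrors the `cmp`/`rm`/`mv` logic in the loops above. An unchanged dump is discarded, so the old file and its modification time stay untouched and ownCloud has nothing to upload.

```sh
#!/bin/sh
# Hypothetical helper mirroring the cmp/rm/mv logic of the backup script.
# Returns 0 if <old> was replaced by <new>, 1 if nothing changed.
# Usage: update_if_changed <new-file> <old-file>
update_if_changed() {
    new=$1
    old=$2
    if cmp -s "$old" "$new"; then
        # identical: drop the fresh copy, keep the old file as it is
        rm -f "$new"
        return 1
    fi
    # different, or no previous dump yet: the fresh dump replaces it
    mv "$new" "$old"
}
```

Inside the Redmine loop, for example, the `if ... fi` block could become `update_if_changed $BACKUPS_DIR/Redmine/$TABLE.mydump2 $BACKUPS_DIR/Redmine/$TABLE.mydump`.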

Finally, here is what’s inside /usr/local/bin/safe_owncloud (the wrapper discussed above):

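```sh
#!/bin/sh

su=/bin/su
owncloudcmd=/usr/bin/owncloudcmd

OWNCLOUD_USER=sync
OWNCLOUD_SHELL=/bin/sh

# Run owncloudcmd as the unprivileged sync user, so that it cannot
# overwrite the server files it backs up. Note that $* joins all
# arguments with spaces, so no argument may contain a space.
$su - $OWNCLOUD_USER -s $OWNCLOUD_SHELL -c "$owncloudcmd $*"

# pass su's (i.e. owncloudcmd's) exit status on to the caller
exit $?
```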


The next step could be using EncFS, which fits well with this solution: all files can be encrypted before they are uploaded to the cloud and decrypted after they are downloaded to the home computer.
